The History and Future of ER Diagrams: How AI is Transforming Data Modeling

Text by Takafumi Endo


As the Liam ERD team, we’ve often found that revisiting the origins of Entity-Relationship (ER) diagrams offers fresh perspectives on today’s challenges. By looking at how ER modeling was born and has evolved over the decades, we can better understand both our current design philosophy and where we might be heading. If you’re in the thick of database design, or just curious about it, this story might help guide you toward the next phase of data modeling.

The Birth of ER Diagrams (1976)

Back in 1976, Peter Chen introduced a revolutionary idea in his paper, “The Entity-Relationship Model: Toward a Unified View of Data.” He basically gave us the blueprint for how to visualize databases at a conceptual level—think of it like the difference between drawing a map on paper and actually walking the terrain.

- Entities are the “things” in your domain (like Customers, Orders, or Products). In relational databases, they usually correspond to tables, with attributes that define their properties.

- Relationships spell out how these entities connect: one-to-one, one-to-many, or many-to-many.

- Attributes hold the details about your entities or relationships—like the color of a product or a timestamp for an order.

- Keys ensure we can find what we’re looking for without mixing up different entities (primary keys, foreign keys, and so on).

- Constraints keep the data consistent, preventing nonsense values from creeping in.

```text
[DEPARTMENT] --- (WORKS_FOR, 1:N) --- [EMPLOYEE] --- (ASSIGNED_TO, M:N) --- [PROJECT]
      |                                    |                                    |
  {Dept_ID}                            {Emp_ID}                             {Project_ID}
  {Name}                               {Name}                               {Title}
  {Location}                           {Position}                           {Budget}
```

Chen’s framework encouraged designers to think carefully about how data should be organized before writing a single line of implementation code. This “plan first, build later” approach helped reduce duplication, improved data quality, and guided everything from indexing strategies to normalization. Chen presented his model as a unifying view across the network, relational, and entity-set models of the day, and it was not tied to relational systems alone. Its ideas have since influenced everything from NoSQL document stores to graph databases. It’s no exaggeration to say that ER diagrams laid the groundwork for modern data management, no matter where you’re storing your bits and bytes.
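The sketch below makes the DEPARTMENT, EMPLOYEE, and PROJECT diagram concrete by mapping its entities, relationships, keys, and constraints onto relational tables. It uses Python's built-in `sqlite3` module only so that the example runs anywhere. The table and column names, including the junction table `assigned_to`, are our own choices and do not come from Chen's paper. The 1:N WORKS_FOR relationship becomes a foreign key on the employee table. The M:N ASSIGNED_TO relationship needs a table of its own.

```python
import sqlite3

# Illustrative schema; names are our own, not Chen's.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled

conn.executescript("""
CREATE TABLE department (
    dept_id  INTEGER PRIMARY KEY,
    name     TEXT NOT NULL,
    location TEXT
);

-- WORKS_FOR (1:N): each employee row points to exactly one department.
CREATE TABLE employee (
    emp_id   INTEGER PRIMARY KEY,
    name     TEXT NOT NULL,
    position TEXT,
    dept_id  INTEGER NOT NULL REFERENCES department(dept_id)
);

CREATE TABLE project (
    project_id INTEGER PRIMARY KEY,
    title      TEXT NOT NULL,
    budget     NUMERIC CHECK (budget >= 0)
);

-- ASSIGNED_TO (M:N): a junction table keyed by both participants.
CREATE TABLE assigned_to (
    emp_id     INTEGER REFERENCES employee(emp_id),
    project_id INTEGER REFERENCES project(project_id),
    PRIMARY KEY (emp_id, project_id)
);
""")

conn.execute("INSERT INTO department VALUES (1, 'Research', 'Tokyo')")
conn.execute("INSERT INTO employee VALUES (10, 'Aiko', 'Engineer', 1)")
conn.execute("INSERT INTO project VALUES (100, 'Schema Tool', 50000)")
conn.execute("INSERT INTO assigned_to VALUES (10, 100)")

# Constraints at work: an employee cannot reference a missing department.
try:
    conn.execute("INSERT INTO employee VALUES (11, 'Ben', 'Analyst', 99)")
    fk_rejected = False
except sqlite3.IntegrityError:
    fk_rejected = True

rows = conn.execute("""
    SELECT e.name, d.name, p.title
    FROM employee e
    JOIN department d  ON d.dept_id = e.dept_id
    JOIN assigned_to a ON a.emp_id = e.emp_id
    JOIN project p     ON p.project_id = a.project_id
""").fetchall()
print(rows)         # [('Aiko', 'Research', 'Schema Tool')]
print(fk_rejected)  # True
```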

The Expansion of Enhanced ER Models (1980s)

As databases grew increasingly complex in the 1980s, Ramez Elmasri and Shamkant Navathe, particularly through their book Fundamentals of Database Systems, extended Chen’s model and helped popularize the Enhanced Entity-Relationship (EER) model.

A major innovation of this era was the integration of supertype–subtype structures, effectively enabling a form of inheritance. For instance, you could have a general “Person” entity and then specialized entities like “Student” or “Staff,” each with its own unique attributes. By leveraging generalization and specialization, you can build more natural data models that mirror real-world categorization.

```sql
-----------------------------------------------------
-- 1. Person (Supertype)
-----------------------------------------------------
CREATE TABLE Person (
    person_id INT PRIMARY KEY,
    name      VARCHAR(50) NOT NULL
);

-----------------------------------------------------
-- 2. Student (Subtype)
-- Reuses Person's primary key, which doubles as a foreign key
-----------------------------------------------------
CREATE TABLE Student (
    person_id      INT PRIMARY KEY,
    student_number VARCHAR(10) NOT NULL,
    FOREIGN KEY (person_id) REFERENCES Person(person_id)
);

-----------------------------------------------------
-- 3. Staff (Subtype)
-- Reuses Person's primary key, which doubles as a foreign key
-----------------------------------------------------
CREATE TABLE Staff (
    person_id    INT PRIMARY KEY,
    staff_number VARCHAR(10) NOT NULL,
    role         VARCHAR(20) NOT NULL,
    FOREIGN KEY (person_id) REFERENCES Person(person_id)
);
```

EER models also allow for finer-grained constraints. One example is whether each supertype instance must belong to exactly one subtype (a disjoint specialization) or may belong to several subtypes at once (an overlapping one). Although these details may appear subtle, they prove crucial in fields like finance, manufacturing, and healthcare. Furthermore, the rise of advanced modeling tools (like ERwin) demonstrated the practical benefits of EER concepts, helping database designers create well-organized and efficient systems.
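The three Person/Student/Staff tables above do not enforce a disjointness constraint on their own. Nothing stops the same `person_id` from appearing in both Student and Staff. One common relational technique adds a discriminator column (our hypothetical `person_type`) to the supertype. Each subtype then references the pair `(person_id, person_type)` and uses a CHECK to pin the type. This is a sketch using Python's `sqlite3` module. It is one option among several (triggers are another) and is not a feature of the EER model itself.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")

# Hypothetical schema: person_type is our discriminator column.
conn.executescript("""
CREATE TABLE person (
    person_id   INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    person_type TEXT NOT NULL CHECK (person_type IN ('student', 'staff')),
    UNIQUE (person_id, person_type)   -- target for the composite FKs below
);

CREATE TABLE student (
    person_id      INTEGER PRIMARY KEY,
    person_type    TEXT NOT NULL DEFAULT 'student' CHECK (person_type = 'student'),
    student_number TEXT NOT NULL,
    FOREIGN KEY (person_id, person_type) REFERENCES person (person_id, person_type)
);

CREATE TABLE staff (
    person_id    INTEGER PRIMARY KEY,
    person_type  TEXT NOT NULL DEFAULT 'staff' CHECK (person_type = 'staff'),
    staff_number TEXT NOT NULL,
    role         TEXT NOT NULL,
    FOREIGN KEY (person_id, person_type) REFERENCES person (person_id, person_type)
);
""")

def try_insert(sql: str) -> bool:
    """Return True if the statement succeeds, False if a constraint rejects it."""
    try:
        conn.execute(sql)
        return True
    except sqlite3.IntegrityError:
        return False

conn.execute("INSERT INTO person VALUES (1, 'Alice', 'student')")
conn.execute("INSERT INTO student (person_id, student_number) VALUES (1, 'S0001')")
conn.execute("INSERT INTO person VALUES (2, 'Bob', 'staff')")

# Alice is a student, so adding her as staff violates disjointness.
alice_as_staff = try_insert(
    "INSERT INTO staff (person_id, staff_number, role) VALUES (1, 'T0001', 'TA')")
bob_as_staff = try_insert(
    "INSERT INTO staff (person_id, staff_number, role) VALUES (2, 'T0002', 'Lecturer')")
print(alice_as_staff, bob_as_staff)  # False True
```

For an overlapping specialization, you would drop the discriminator and keep the simpler design shown earlier.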

The Rise of Object-Oriented ER Models (1990s)

In the 1990s, object-oriented programming attracted widespread attention, and database modeling followed suit. Batini, Ceri, and Navathe explored object-oriented concepts within advanced conceptual modeling in “Conceptual Database Design: An Entity-Relationship Approach.” These developments paved the way for object-oriented database modeling, often referred to as the Object-Oriented ER (OOER) model, and influenced a broad range of researchers and publications of that time.

In an OOER model, entities can encapsulate not only data but also behavior (methods), reflecting class-and-object concepts from programming. Inheritance plays a central role, allowing child entities to reuse attributes from their parent, while polymorphism lets them behave differently depending on context. However, implementing this behavior directly in a relational database is not straightforward. In practice, most systems use ORM tools (like Hibernate or Entity Framework) to map classes and objects to tables, leaving methods and business logic in the application layer.
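The sketch below illustrates that split with a deliberately tiny, hand-rolled mapping in Python. It is not how Hibernate or Entity Framework work internally, and the class and function names (`save`, `load`) are hypothetical. Data lives in tables shaped like the earlier Person/Student design, while the polymorphic behavior (`describe`) exists only in the application classes.

```python
import sqlite3
from dataclasses import dataclass

@dataclass
class Person:
    person_id: int
    name: str

    def describe(self) -> str:
        return f"{self.name} (person)"

@dataclass
class Student(Person):
    student_number: str

    # Polymorphism: same method name, subtype-specific behavior.
    def describe(self) -> str:
        return f"{self.name} (student {self.student_number})"

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE person  (person_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE student (person_id INTEGER PRIMARY KEY REFERENCES person(person_id),
                      student_number TEXT NOT NULL);
""")

def save(obj: Person) -> None:
    """Hypothetical mapper: shared attributes go to person, extras to student."""
    conn.execute("INSERT INTO person VALUES (?, ?)", (obj.person_id, obj.name))
    if isinstance(obj, Student):
        conn.execute("INSERT INTO student VALUES (?, ?)",
                     (obj.person_id, obj.student_number))

def load(person_id: int) -> Person:
    """Rebuild the most specific class by LEFT JOINing the subtype table."""
    row = conn.execute("""
        SELECT p.person_id, p.name, s.student_number
        FROM person p LEFT JOIN student s ON s.person_id = p.person_id
        WHERE p.person_id = ?""", (person_id,)).fetchone()
    if row[2] is not None:
        return Student(row[0], row[1], row[2])
    return Person(row[0], row[1])

save(Person(1, "Alice"))
save(Student(2, "Bob", "S0002"))
print([load(i).describe() for i in (1, 2)])
# ['Alice (person)', 'Bob (student S0002)']
```

The database never sees `describe`. Only the rows persist, which matches the "methods stay in the application layer" pattern described above.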

Below is an example of Oracle’s object-relational features. Object types arrived with Oracle8 in 1997, and type inheritance followed in Oracle9i. Here, PersonType acts like a parent class (supertype), and StudentType and StaffType inherit its attributes through the UNDER keyword. Rows are stored as objects in an object table. Because object tables are substitutable by default, a single Persons table can hold instances of all three types.

```sql
-----------------------------------------------------
-- Example of Oracle Object Types and Inheritance
-----------------------------------------------------

-- 1. Define a PersonType (supertype); NOT FINAL permits subtypes
CREATE OR REPLACE TYPE PersonType AS OBJECT (
    person_id NUMBER,
    name      VARCHAR2(50)
) NOT FINAL;
/

-- 2. Define subtypes (StudentType / StaffType)
--    inheriting from PersonType
CREATE OR REPLACE TYPE StudentType UNDER PersonType (
    student_number VARCHAR2(10)
);
/

CREATE OR REPLACE TYPE StaffType UNDER PersonType (
    staff_number VARCHAR2(10),
    role         VARCHAR2(20)
);
/

-----------------------------------------------------
-- 3. Create one object table for the whole hierarchy.
--    UNDER applies to types (and object views), not tables;
--    object tables are substitutable by default, so Persons
--    can store PersonType, StudentType, and StaffType rows.
-----------------------------------------------------
CREATE TABLE Persons OF PersonType (
    CONSTRAINT pk_person_id PRIMARY KEY (person_id)
);

INSERT INTO Persons VALUES (StudentType(1, 'Alice', 'S0001'));
INSERT INTO Persons VALUES (StaffType(2, 'Bob', 'T0001', 'Lecturer'));

-- 4. Query a single subtype with IS OF and TREAT
SELECT p.person_id,
       p.name,
       TREAT(VALUE(p) AS StudentType).student_number AS student_number
FROM   Persons p
WHERE  VALUE(p) IS OF (StudentType);
```

Oracle’s object-relational extensions grew out of the research and products of the 1990s. With them, you can represent classes, inheritance, and substitutable object tables directly within the database. Many organizations still prefer to keep application logic outside the database. Even so, OOER models marked an important step in bringing conceptual design closer to object-oriented principles. Tools like Rational Rose allowed designers to visualize these object structures using UML-style diagrams, substantially enhancing collaboration between software engineers and database architects during the object-oriented boom of the 1990s.

UML and ER Modeling (2000s)

Toward the end of the 1990s and into the 2000s, the Unified Modeling Language (UML) took center stage. Spearheaded by Grady Booch, James Rumbaugh, and Ivar Jacobson, UML built upon ER concepts and generalized them into a broad, standardized language for modeling software systems.

UML’s class diagrams often stood in for ER diagrams, capturing similar ideas but within a full-fledged software design context. And UML wasn’t just about data structure; it also included use case diagrams, sequence diagrams, and deployment diagrams that offered a much wider picture of how an application behaved. Plus, with OCL (Object Constraint Language), you could specify detailed rules to keep data valid, bridging a gap that sometimes existed between conceptual models and real-life database constraints.
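Here is a small illustration of the kind of rule OCL captures. An invariant such as `context Order inv: self.quantity > 0` (our own example, not taken from a specific model) is often carried down to the database as a CHECK constraint. The sketch uses Python's `sqlite3` module and a hypothetical `orders` table to show the database rejecting a row that breaks the invariant.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# OCL (conceptual):  context Order inv: self.quantity > 0
# SQL (physical):    the same rule as a CHECK constraint on a hypothetical table
conn.execute("""
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        quantity INTEGER NOT NULL CHECK (quantity > 0)
    )
""")

def insert_order(order_id: int, quantity: int) -> bool:
    """Return True if the row satisfies the invariant and is stored."""
    try:
        conn.execute("INSERT INTO orders VALUES (?, ?)", (order_id, quantity))
        return True
    except sqlite3.IntegrityError:
        return False

print(insert_order(1, 3))  # True
print(insert_order(2, 0))  # False: violates quantity > 0
```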

UML Class Diagram Example

The following image illustrates how a UML class diagram can represent the same relationships found in a typical ER diagram—specifically, the one-to-many link between users and orders, as well as between orders and products.

For the detailed PlantUML code, see here.

It’s the same conceptual approach you’d see in a traditional ER diagram, but using UML notation and syntax that integrate smoothly into broader software modeling practices.

Personally, I first encountered ER diagrams while studying computer science, alongside UML. At the time, UML’s structured approach to software modeling made a strong impression on me, but I quickly realized how ER diagrams provided a similarly clear and intuitive way to represent database structures. Learning both in parallel helped me see how data modeling and system architecture are deeply interconnected—an insight that continues to influence my work today.

The rise of Model-Driven Architecture (MDA) made these diagrams even more valuable, letting architects automate the creation of physical databases from these conceptual models. Tools like Oracle Designer showed how UML notations could be deeply integrated with database engineering, bridging the worlds of software developers and DBAs more smoothly than ever.
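The core MDA idea is to derive physical artifacts from a platform-independent model, and it can be sketched in a few lines. In this hypothetical example, a conceptual model is written as plain Python data: entity names, typed attributes, and simple many-to-one references. A function transforms it into SQL DDL, and the DDL is executed in SQLite to confirm that the output is valid. Real MDA toolchains use richer metamodels and transformation languages. The `MODEL` format and the `to_ddl` function are our own assumptions.

```python
import sqlite3

# Hypothetical platform-independent model: entities, attributes, references.
MODEL = {
    "customer": {"attributes": {"name": "TEXT"}, "references": []},
    "orders": {"attributes": {"placed_at": "TEXT"}, "references": ["customer"]},
}

def to_ddl(model: dict) -> list[str]:
    """Turn each entity into a CREATE TABLE with a surrogate key and FK columns."""
    statements = []
    for entity, spec in model.items():
        columns = [f"{entity}_id INTEGER PRIMARY KEY"]
        columns += [f"{attr} {sql_type} NOT NULL"
                    for attr, sql_type in spec["attributes"].items()]
        # Each reference becomes a foreign-key column (a many-to-one link).
        columns += [f"{ref}_id INTEGER NOT NULL REFERENCES {ref}({ref}_id)"
                    for ref in spec["references"]]
        statements.append(f"CREATE TABLE {entity} (\n  " + ",\n  ".join(columns) + "\n);")
    return statements

ddl = to_ddl(MODEL)
print("\n".join(ddl))

# Apply the generated DDL to a scratch database to check that it is valid SQL.
conn = sqlite3.connect(":memory:")
for stmt in ddl:
    conn.execute(stmt)
tables = [r[0] for r in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
print(tables)  # ['customer', 'orders']
```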

ER Diagrams in the Big Data Era (2010s)

Come the 2010s, big data took the spotlight, often revealing the limits of traditional relational systems when it came to massive or highly variable datasets. Renzo Angles and Claudio Gutierrez outlined how graph databases reimagined “entities and relationships” as nodes and edges, making them perfect for highly interconnected data—like social networks or recommendation engines.
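A friend-of-friend recommendation shows why the node-and-edge view suits interconnected data. In a relational schema, this query needs repeated self-joins. Over a graph, it is a short traversal. The sketch below uses a plain Python adjacency map as a stand-in for a graph database. The data and the `recommend_friends` function are hypothetical.

```python
from collections import Counter

# Nodes are people; each undirected FRIENDS_WITH edge is stored in both directions.
edges = [("ann", "bob"), ("ann", "cat"), ("bob", "dan"), ("cat", "dan"), ("cat", "eve")]
graph: dict[str, set[str]] = {}
for a, b in edges:
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)

def recommend_friends(person: str) -> list[tuple[str, int]]:
    """Rank non-friends by how many mutual friends they share with `person`."""
    direct = graph.get(person, set())
    counts = Counter(
        fof
        for friend in direct
        for fof in graph[friend]
        if fof != person and fof not in direct
    )
    # Sort by mutual-friend count (descending), then by name for stable output.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))

print(recommend_friends("ann"))  # [('dan', 2), ('eve', 1)]
```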

Meanwhile, document-oriented databases (like MongoDB or Firebase) introduced the idea of semi-structured data, letting you store flexible, nested documents without the rigid constraints of relational schemas. The trade-off was that you had to be more careful about data consistency and duplication, but it paid off in speed and agility.
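The trade-off is easiest to see side by side. In this hypothetical example, each order document embeds a copy of the customer's details, so a single read returns everything a page needs. When the customer changes their email, however, every embedded copy must be found and updated. In the normalized version, one write suffices, at the cost of a lookup (a join) on read. Plain Python dicts stand in for documents here, and no particular database's API is implied.

```python
# Hypothetical data; plain dicts stand in for documents in a document store.
# Document style: customer details are embedded (and therefore duplicated).
orders = [
    {"order_id": 1, "customer": {"id": 7, "email": "kim@old.example"}, "items": ["pen"]},
    {"order_id": 2, "customer": {"id": 7, "email": "kim@old.example"}, "items": ["ink", "pad"]},
]

def update_email_everywhere(docs: list[dict], customer_id: int, email: str) -> int:
    """Application code must find every embedded copy; returns how many changed."""
    changed = 0
    for doc in docs:
        if doc["customer"]["id"] == customer_id:
            doc["customer"]["email"] = email
            changed += 1
    return changed

# Normalized style: one customer record that orders reference by id.
customers = {7: {"email": "kim@old.example"}}
normalized_orders = [{"order_id": 1, "customer_id": 7}, {"order_id": 2, "customer_id": 7}]

copies_changed = update_email_everywhere(orders, 7, "kim@new.example")
customers[7]["email"] = "kim@new.example"  # a single write covers every order

print(copies_changed)  # 2
print([customers[o["customer_id"]]["email"] for o in normalized_orders])
# ['kim@new.example', 'kim@new.example']
```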

Distributed systems also became the norm, relying on partitioning and sharding to handle massive workloads worldwide. And in the midst of all this, streaming frameworks (Kafka, Flink, Spark) brought real-time data processing to the forefront, pushing database design into an event-driven world. Even so, ER modeling principles continued to provide a conceptual anchor, helping teams manage a mix of batch and streaming data with tools like Lucidchart for quick, collaborative diagramming.
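Sharding usually starts with a routing rule that maps each key to a partition. The sketch below shows hash partitioning with a stable hash. Python's built-in `hash()` is randomized per process for strings, so `hashlib` is used instead. The shard count and key format are arbitrary assumptions. With simple modulo routing, changing the shard count remaps most keys, which is one reason production systems often prefer consistent hashing or range-based schemes.

```python
import hashlib

def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index using a hash that is stable across processes."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

keys = [f"user:{i}" for i in range(1000)]
placement = {k: shard_for(k, 4) for k in keys}

# Every key lands on exactly one of the 4 shards, and routing is deterministic.
counts = [sum(1 for s in placement.values() if s == shard) for shard in range(4)]
print(counts)  # roughly even; exact numbers depend on the hash values

# Growing from 4 to 5 shards with modulo routing moves most keys.
moved = sum(1 for k in keys if shard_for(k, 5) != placement[k])
print(moved / len(keys))  # expected to be near 0.8 (about 4 in 5 keys move)
```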

ER Diagrams and Cloud Databases (2020s)

By the 2020s, the cloud had matured into a full-fledged platform for distributed, scalable data management. Daniel J. Abadi had already mapped out many of the challenges of scaling and availability in cloud environments in his 2009 paper “Data Management in the Cloud: Limitations and Opportunities.” Although that paper focuses primarily on elasticity, partitioning, and cost, it indirectly informs how we adapt classic ER modeling principles to large-scale, distributed systems. This is particularly true for data partitioning, replication strategies, and multi-tenant schema designs. Suddenly, teams were grappling with multi-tenant designs, serverless databases, and an ongoing debate over strong vs. eventual consistency.

- Amazon DynamoDB, Google BigQuery, and other serverless databases offloaded operational tasks like scaling and replication.

- Sharding and partitioning became everyday concerns for big applications.

- Machine learning began to help optimize schema design, suggesting indexes or automatically rebalancing resources based on workload patterns.
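A common multi-tenant pattern (shared database, shared schema) puts a tenant identifier into every table's key. Rows from different customers can then share physical storage without colliding. Here is a minimal sketch with SQLite. The `tenant_id` column, the `project` table, and the `projects_for` helper are our own assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE project (
        tenant_id  TEXT    NOT NULL,
        project_id INTEGER NOT NULL,
        title      TEXT    NOT NULL,
        -- The tenant is part of the key, so ids only need to be unique per tenant.
        PRIMARY KEY (tenant_id, project_id)
    )
""")
conn.executemany(
    "INSERT INTO project VALUES (?, ?, ?)",
    [("acme", 1, "Website"), ("acme", 2, "Billing"), ("globex", 1, "Warehouse")],
)

def projects_for(tenant_id: str) -> list[str]:
    """Every query is scoped by tenant_id; forgetting this filter leaks data."""
    rows = conn.execute(
        "SELECT title FROM project WHERE tenant_id = ? ORDER BY project_id",
        (tenant_id,),
    )
    return [title for (title,) in rows]

print(projects_for("acme"))    # ['Website', 'Billing']
print(projects_for("globex"))  # ['Warehouse']
```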

At the same time, distributed SQL solutions (like CockroachDB or YugabyteDB) promised both horizontal scaling and ACID compliance, offering new frontiers for applying ER ideas. Tools such as dbdiagram.io made it simpler than ever to draw these diagrams and keep them in sync with real cloud databases.

The Role of Generative AI in ERD Automation (Present)

In recent years, AI has been transforming how we approach data modeling. Multiple research projects have demonstrated that large language models can parse plain-English requirements and produce initial ER diagrams. Some tools also offer capabilities like adaptive indexing, data consistency management, and synthetic data generation. The table below, however, focuses on five core features that best represent the present impact of generative AI on ERD automation. These features highlight AI’s growing role in keeping database architectures both flexible and robust as real-world demands evolve.

| Feature | Description | Example |
|---|---|---|
| Automatic Schema Generation | Translates user stories into schemas. | LLM-based code generator |
| Error Detection | Identifies normalization issues and inconsistencies early. | Preempt partial dependencies |
| Performance Optimization | Uses AI to improve queries and partitioning. | Rewrite suboptimal queries |
| Predictive Schema Evolution | Anticipates future schema requirements based on usage patterns. | Suggest new columns/tables |
| Probabilistic Schema Inference | Detects anomalies and refines schema using probabilistic models. | Flag unexpected NoSQL fields |
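As a rough illustration of the "Error Detection" row, the sketch below checks sample rows for partial dependencies. A partial dependency is a case where a non-key column is determined by only part of a composite primary key, which violates Second Normal Form. The function names and sample data are hypothetical. A data-driven check like this only shows that a dependency holds in the rows seen so far. It cannot prove the dependency in general, and small samples can produce spurious hits.

```python
from itertools import combinations

def holds(rows: list[dict], lhs: tuple[str, ...], rhs: str) -> bool:
    """True if, in these rows, equal values of lhs always pair with one rhs value."""
    seen: dict[tuple, object] = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        if seen.setdefault(key, row[rhs]) != row[rhs]:
            return False
    return True

def partial_dependencies(rows: list[dict], key: tuple[str, ...]) -> list[tuple[tuple[str, ...], str]]:
    """List (proper key subset, column) pairs where the subset alone determines the column."""
    non_key = [c for c in rows[0] if c not in key]
    found = []
    for size in range(1, len(key)):          # proper, non-empty subsets only
        for subset in combinations(key, size):
            for col in non_key:
                if holds(rows, subset, col):
                    found.append((subset, col))
    return found

# Composite key (order_id, product_id); product_name depends on product_id alone.
order_lines = [
    {"order_id": 1, "product_id": "p1", "product_name": "Pen", "qty": 2},
    {"order_id": 1, "product_id": "p2", "product_name": "Ink", "qty": 1},
    {"order_id": 2, "product_id": "p1", "product_name": "Pen", "qty": 5},
]
print(partial_dependencies(order_lines, ("order_id", "product_id")))
# [(('product_id',), 'product_name')]
```

A tool would typically follow up by suggesting that `product_name` move to a separate product table keyed by `product_id`.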

Some modern solutions go even further. For example, adaptive indexing dynamically reconfigures indexes when read or write operations become dominant, optimizing performance with minimal human oversight. Similarly, data consistency management ensures integrity across multiple systems, often by automatically resolving conflicts in near real-time—especially important for distributed databases. Additionally, synthetic data generation plays a critical role in testing, making it possible to create realistic but anonymized staging environments for sensitive or sparse datasets.
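Here is a toy version of the workload-driven index policy described above. The `IndexAdvisor` class, its thresholds, and the idea of counting reads and writes per column are all hypothetical. Real systems weigh query plans, index size, and maintenance cost rather than raw ratios.

```python
from collections import Counter
from dataclasses import dataclass, field

@dataclass
class IndexAdvisor:
    """Hypothetical advisor: suggests index changes from per-column read/write counts."""
    read_heavy_ratio: float = 4.0   # suggest an index when reads outnumber writes 4:1
    write_heavy_ratio: float = 0.5  # suggest dropping it when writes dominate 2:1
    reads: Counter = field(default_factory=Counter)
    writes: Counter = field(default_factory=Counter)
    indexed: set = field(default_factory=set)

    def record(self, column: str, is_write: bool) -> None:
        (self.writes if is_write else self.reads)[column] += 1

    def recommend(self) -> dict[str, str]:
        """Return {column: 'create' | 'drop'} for columns whose balance has shifted."""
        actions = {}
        for column in sorted(set(self.reads) | set(self.writes)):
            ratio = self.reads[column] / max(self.writes[column], 1)
            if column not in self.indexed and ratio >= self.read_heavy_ratio:
                actions[column] = "create"
            elif column in self.indexed and ratio <= self.write_heavy_ratio:
                actions[column] = "drop"
        return actions

advisor = IndexAdvisor(indexed={"status"})
for _ in range(50):
    advisor.record("email", is_write=False)   # email is filtered on constantly
for _ in range(30):
    advisor.record("status", is_write=True)   # status is updated far more than read
advisor.record("status", is_write=False)
print(advisor.recommend())  # {'email': 'create', 'status': 'drop'}
```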

The dream of a self-managing, self-optimizing database is thus closer to reality than ever. Tools like GenSQL exemplify this new wave of AI-driven data management. Its probabilistic approach can enhance schema integrity while providing predictive insights. When MIT researchers compared GenSQL to other AI-based data analysis methods, they found it was 1.7 to 6.8 times faster, offering more accurate and explainable results. As AI-driven tools mature, they increasingly handle the “grunt work”—from schema design to performance tuning—with minimal human intervention.

Moreover, integrating generative AI into database management allows schema evolution to be data-driven. GenSQL’s ability to detect anomalies, resolve errors, and generate synthetic data makes it especially promising for organizations that need rapid, reliable schema adjustments. Its probabilistic models also provide calibrated uncertainty, so the engine avoids overconfidence when data is underrepresented. This is a critical requirement in fields like healthcare and finance.
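GenSQL's internals are far richer than anything that fits here, but a Beta-Binomial estimate conveys the intuition behind calibrated uncertainty when data is underrepresented. This hypothetical sketch estimates how often each field appears in a NoSQL collection. It flags a field as unexpected only when even the upper end of a rough 95% interval stays below a threshold. As a result, a field seen once in a small collection is not condemned on thin evidence. This is not GenSQL's API or model. The threshold, the uniform prior, and the normal approximation are our assumptions.

```python
import math

def presence_interval(seen: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate interval for a field's presence rate under a Beta(1, 1) prior."""
    a, b = 1 + seen, 1 + (total - seen)          # Beta posterior parameters
    mean = a / (a + b)
    sd = math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))
    return max(0.0, mean - z * sd), min(1.0, mean + z * sd)

def unexpected_fields(docs: list[dict], threshold: float = 0.05) -> list[str]:
    """Flag fields whose presence rate is confidently below `threshold`."""
    total = len(docs)
    flagged = []
    for f in sorted({f for d in docs for f in d}):
        seen = sum(1 for d in docs if f in d)
        _, upper = presence_interval(seen, total)
        if upper < threshold:
            flagged.append(f)
    return flagged

# Large collection: one document in 1000 carries a stray "nickname" field.
big = [{"id": i, "email": f"u{i}@example.com"} for i in range(1000)]
big[0]["nickname"] = "zed"

# Small collection: 1 in 30 (raw rate about 0.033, below the threshold),
# but with so little data the estimate stays uncertain and is not flagged.
small = [{"id": i} for i in range(30)]
small[0]["nickname"] = "amy"

print(unexpected_fields(big))    # ['nickname']
print(unexpected_fields(small))  # []
```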

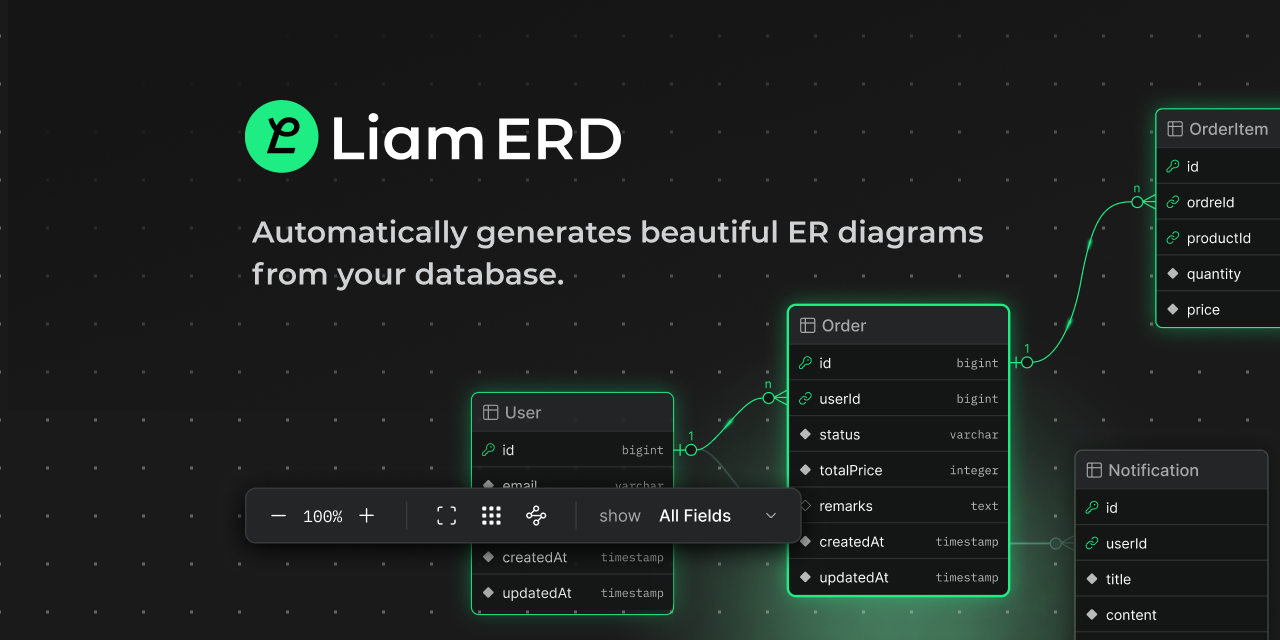

The Future of Data Modeling and Liam ERD

While developing Liam ERD, we’ve seen how quickly ER modeling has to adapt to new paradigms like multi-cloud deployments, big data analytics, and AI-driven optimization. At its heart, the ER diagram is still about clarity and maintainability—but it’s evolving beyond just a static visual tool.

Evolving ER Modeling with AI and Cloud-Native Environments

Modern systems change too fast for static diagrams to keep up. That’s why large language models (LLMs) are increasingly being used to turn diagrams into dynamic collaborators. These AI-driven tools can highlight potential design pitfalls, automatically spot performance bottlenecks, and provide real-time suggestions when new requirements emerge.

For simple CRUD applications, this evolution is particularly impactful. The time required to go from ER diagram design to API generation is shrinking rapidly, as AI-powered tools can now automate much of the schema definition, query optimization, and even API scaffolding. Work that once took days of manual effort can now be compressed into minutes, accelerating development cycles and reducing human error.
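Here is a minimal, non-AI sketch of the step from diagram to API. A hypothetical generator reads a table's columns through SQLite's `PRAGMA table_info` and emits parameterized CRUD statements that an API layer could wrap. Real tools, AI-assisted or not, also handle types, validation, and relationships. The `crud_sql` function and its output shape are our own, and it assumes a single-column primary key.

```python
import sqlite3

def crud_sql(conn: sqlite3.Connection, table: str) -> dict[str, str]:
    """Generate parameterized CRUD statements for `table` from its live schema.

    `table` is interpolated directly, so it must come from trusted schema
    metadata, never from user input.
    """
    # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
    cols = conn.execute(f"PRAGMA table_info({table})").fetchall()
    pk = next(c[1] for c in cols if c[5] == 1)   # assumes a single-column key
    names = [c[1] for c in cols]
    non_pk = [n for n in names if n != pk]
    return {
        "create": f"INSERT INTO {table} ({', '.join(names)}) "
                  f"VALUES ({', '.join('?' for _ in names)})",
        "read": f"SELECT {', '.join(names)} FROM {table} WHERE {pk} = ?",
        "update": f"UPDATE {table} SET {', '.join(f'{n} = ?' for n in non_pk)} WHERE {pk} = ?",
        "delete": f"DELETE FROM {table} WHERE {pk} = ?",
    }

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
sql = crud_sql(conn, "customer")

conn.execute(sql["create"], (1, "Kim", "kim@example.com"))
conn.execute(sql["update"], ("Kim Lee", "kim@example.com", 1))
print(conn.execute(sql["read"], (1,)).fetchone())  # (1, 'Kim Lee', 'kim@example.com')
conn.execute(sql["delete"], (1,))
print(conn.execute(sql["read"], (1,)).fetchone())  # None
```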

We’re also weaving compliance and governance into the workflow. Imagine an AI assistant that alerts you if a proposed schema update violates a data privacy regulation or inadvertently exposes sensitive information. Low-code and no-code solutions are another big push, allowing non-technical stakeholders to help shape the database design without fiddling with SQL commands or schema definitions.
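A very small version of the compliance check imagined above could be a lint step. It inspects a proposed schema and flags columns whose names suggest personal data. Everything here is hypothetical. The name patterns are illustrative and are not derived from any regulation. A real assistant would combine metadata, data sampling, and policy rules rather than rely on column names alone.

```python
import re
import sqlite3

# Hypothetical patterns for column names that often hold personal data.
PII_PATTERNS = {
    "email": re.compile(r"e_?mail", re.IGNORECASE),
    "phone": re.compile(r"phone|mobile", re.IGNORECASE),
    "national_id": re.compile(r"ssn|passport|national_id", re.IGNORECASE),
    "birth_date": re.compile(r"birth|dob", re.IGNORECASE),
}

def pii_warnings(ddl: str) -> list[str]:
    """Apply a proposed DDL in a scratch database and report PII-like columns."""
    scratch = sqlite3.connect(":memory:")
    scratch.executescript(ddl)
    warnings = []
    tables = scratch.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name").fetchall()
    for (table,) in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        for _cid, column, *_rest in scratch.execute(f"PRAGMA table_info({table})"):
            for label, pattern in PII_PATTERNS.items():
                if pattern.search(column):
                    warnings.append(f"{table}.{column}: looks like {label}")
    return warnings

proposed = """
CREATE TABLE member (member_id INTEGER PRIMARY KEY, display_name TEXT,
                     email_address TEXT, date_of_birth TEXT);
"""
for w in pii_warnings(proposed):
    print(w)
# member.email_address: looks like email
# member.date_of_birth: looks like birth_date
```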

With distributed architectures becoming the norm, we’re working on AI-driven partitioning strategies that factor in cost, latency, and data residency requirements. And since many teams now juggle multiple database types—relational, graph, document, key-value—we want to let you manage all of that within a single conceptual schema.

Liam Merges Tradition and Innovation

Through this investigation, I’ve gained valuable insights into how ER modeling has not only endured but thrived through waves of technological change. From Peter Chen’s foundational ideas in the 1970s to the rise of big data and cloud-native systems, we’ve witnessed ER diagrams evolve from simple conceptual sketches into robust, adaptive design tools.

Yet most teams are still stuck juggling static diagramming software and new-gen data demands. At Liam, we’re reshaping ER diagrams into “living” assets that respond to workload spikes, regulatory updates, or sudden shifts in user behavior. By weaving AI-driven insights directly into the modeling process, we aim to help designers see potential schema issues, performance bottlenecks, or governance pitfalls long before they become production nightmares.

What’s truly exciting is how this harmonizes both old and new practices. We respect the solid theory behind classic EER and UML models—those foundational rules that keep data clean and consistent. But we’re also pushing the envelope with AI to automate schema evolution, suggest indexing strategies, and anticipate changes as your product matures. Instead of discarding the past, we’re turbocharging it for today’s high-velocity development cycles.

In short, we see a future where ER diagrams aren’t just static diagrams pinned to a whiteboard. They’re dynamic, intelligent companions, continuously learning from real data trends and guiding teams toward more resilient, compliant, and visionary data architectures. If you share this belief that ERD can be both grounded in history and boldly future-proofed, join us at Liam—and let’s redefine the next era of data modeling together.

That’s Liam ERD, a tool for effortlessly generating clear, readable ER diagrams. Give it a try.


References:

- Chen, P. P. (1976) | “The Entity-Relationship Model: Toward a Unified View of Data.”

- Elmasri, R., & Navathe, S. B. (1989) | Fundamentals of Database Systems

- Batini, C., Ceri, S., & Navathe, S. B. (1992) | Conceptual Database Design: An Entity-Relationship Approach

- Booch, G., Rumbaugh, J., & Jacobson, I. (2005) | The Unified Modeling Language User Guide

- Angles, R., & Gutierrez, C. (2008) | “Survey of Graph Database Models”

- Abadi, D. J. (2009) | “Data Management in the Cloud: Limitations and Opportunities”

- Khalil, R., & Ahmed, S. (2022) | “AI-driven Optimization of Relational Database Design Using Deep Learning”

- Zhu, X., Li, Q., Cui, L., & Liu, Y. | “Large Language Model Enhanced Text-to-SQL Generation: A Survey”


Takafumi Endo, CEO of ROUTE06, which develops Liam. After earning his MSc in Information Sciences from Tohoku University, he founded and led an e-commerce startup acquired by a retail company. He also served as an EIR at Delight Ventures.
